Here are a few photos from last night’s NIME concert.

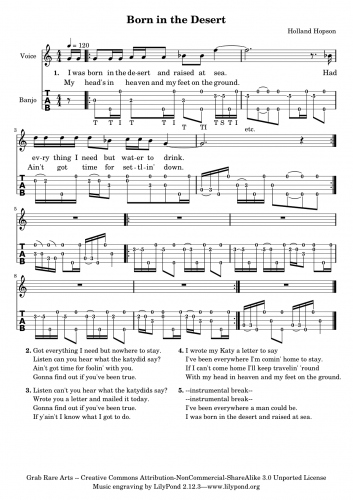

Hopping Around the LilyPond

I’ve been experimenting recently with the open source music notation software LilyPond (thanks CDM!). I had looked into it in 2005 or 2006 and couldn’t understand how it would be of much use to me at the time. I think I recognized the flexibility of LilyPond, but couldn’t justify learning a scripting language (programming language?) when Finale met most of my notation needs. Since then I’ve become increasingly frustrated with Finale–more reluctant than ever to shell out more money for another incremental upgrade that never seems to provide the features I need. At the same time I’ve become more interested in creating non-standard notation, and so I developed a workflow in which only bare bones musical notation was produced using Finale, with the rest of the layout work done in a graphic design program like Illustrator. I’ve also been working more with tablature for five-string banjo, which has never felt comfortable in Finale.

So when I came across LilyPond this time I immediately noticed the support for non-standard notation, everything from Gregorian chant notation to proportional notation found in contemporary music, and of course, tablature. I also saw examples of common (for me) notation tweaks and features such as staves without bar lines; staves in multiple, simultaneous time signatures (that align!); note heads without stems; staves without staff lines (blank staves); note clusters; quarter tones; various accidental-handling schemes, etc. More encouraging was the fact that these features, while not always highlighted, also weren’t buried in the back of the documentation and were presented as viable (even desirable) notational choices. And did I mention that the output looks amazing! LilyPond produces gorgeous scores.

With those encouraging signs I jumped right into the tutorial and quickly decided I would try to notate some of my songs for five-string banjo. After about a week of daily half-hour sessions I had a usable score for “Born in the Desert” and a newfound enthusiasm for LilyPond.

Download the score as a pdf file: born_in_the_desert.pdf

Download the score as a LilyPond .ly file: born_in_the_desert.ly

Working with LilyPond

LilyPond uses a scripting language for all input: there’s no GUI, no point-and-click, no pretty graphics. I suspect my initial reluctance to try LilyPond the first time around was largely a result of this structure. (“What? I have to type out a bunch of ASCII? And then compile it to see the result?”) After a short time with the language, however, I found I quite enjoyed working without a GUI. Here are a few reasons why:

WYSIWYM: LilyPond operates on a “What You See Is What You Mean” principle. I like the fact that I can encode the information that’s important to me separately from its presentation. Working with tablature provides a good example. In some tablature programs, your data only “looks” like actual notation. Behind the scenes it’s actually a collection of graphics commands and specifications–a drawing that resembles music. If you decide later to extract the notation, or even simply transpose it or try an alternate tuning, you quickly find you’re stuck; there’s no (musically significant) “there” there. In LilyPond, you encode the important data and then decide how to present it. If you later change your mind about the presentation, the original data is still around, preserved in a musically sensible manner.
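To make the idea concrete, here is a minimal sketch assuming LilyPond 2.24-era syntax (the `phrase` variable and its notes are hypothetical, not taken from any score mentioned here). The notes are entered once and then presented twice: as written, and transposed up a whole step with `\transpose`.

```lilypond
\version "2.24.0"

% The musical data, entered once. Pitches are absolute (c' = middle C);
% \5 marks a note to be played on the fifth string.
phrase = { g'8\5 d' g b d' b g4 }

% Presentation 1: the phrase as written.
\score {
  \new Staff { \clef "treble_8" \phrase }
}

% Presentation 2: the same data, transposed from g to a (up a whole step).
\score {
  \new Staff { \clef "treble_8" \transpose g a \phrase }
}
```

Only the presentation changes; the stored pitches and string assignment remain musically meaningful, so a tablature view could later be built from the same variable.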

Variables: I may be more of a programmer than I realize or admit to being, since I immediately grabbed on to the powerful options presented by variables. Anything that can be represented in LilyPond can be assigned to a variable: from a recurring rhythmic motive, to a sequence of pitches, to an entire staff of notation. This provides composers with the ability to generate a personal “language” of modules.

I used two simple variables in the score for “Born in the Desert”. One holds a recurring “pinch” gesture that will be familiar to fingerpicking guitar and banjo players. I named it “pinch”:

```lilypond
pinch = {
  <g'\5 d'>8   % eighth-note chord: G on the fifth string plus D
}
```

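This simply creates an eighth-note chord consisting of the D and G above middle C. (The apostrophe after each note name sets its octave; in LilyPond’s absolute mode, c' is middle C.) The “\5” after the G tells LilyPond that this note belongs on the fifth string.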

The other variable contains a similar sequence of notes: a sixteenth-note G on the fifth string, played by the thumb, followed by a D on the first string, plucked by the index finger. I named this variable “ti” for thumb-index:

```lilypond
ti = {
  g'16\5 d'   % d' inherits the sixteenth-note duration
}
```

My use of these variables is far from earth-shattering but felt wonderfully convenient. Every time I needed to represent these gestures all I had to do was type \pinch or \ti.
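Here is a sketch of how variables like these might be combined into a small banjo score with standard notation above tablature. It assumes LilyPond 2.24-era syntax and the built-in `banjo-open-g-tuning`; the `roll` variable and its bar of music are made up for illustration and are not taken from “Born in the Desert”.

```lilypond
\version "2.24.0"

% Gesture variables as described above (straight apostrophes mark octaves).
pinch = { <g'\5 d'>8 }   % eighth-note pinch: G on string 5 with D
ti    = { g'16\5 d' }    % thumb-index: two sixteenths (d' inherits 16)

% Hypothetical bar: each gesture fills one eighth note,
% so eight of them make a 4/4 bar.
roll = { \pinch \ti \ti \pinch \pinch \ti \ti \pinch }

\score {
  <<
    % Standard notation; hide the circled string numbers here.
    \new Staff \with { \omit StringNumber } {
      \clef "treble_8"
      \roll
    }
    % Tablature built from the same variables.
    \new TabStaff \with {
      tablatureFormat = #fret-number-tablature-format-banjo
      stringTunings = #banjo-open-g-tuning
    } {
      \roll
    }
  >>
}
```

Because both staves read the same `roll` variable, a correction to `\pinch` or `\ti` shows up in the notation and the tablature at once.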

Note to self: I enjoyed how useful writing comments to myself could be. If something wasn’t working exactly how I wanted it I could simply add a comment like “% ??? what’s going on here? why is the second verse not aligning the way I expect?” or “% FIX This bar may need to be transposed.” These kinds of placeholders are nothing special in a word-processing environment, but when working with a graphical notation tool I often resorted to maintaining a separate “to do” file that was clumsy to use since it required including wayfinders like: “violin part, page 6, bar 23, beat 1: …”
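For reference, LilyPond has two comment forms: `%` comments out the rest of a line, and `%{ ... %}` comments out a whole block. A sketch reusing the notes-to-self quoted above; the `verse` variable and its notes are hypothetical.

```lilypond
\version "2.24.0"

% A line comment runs from % to the end of the line.
verse = {
  g'8\5 d' g b d' b g4 |  % FIX This bar may need to be transposed.
  %{
    ??? what's going on here? why is the second verse
    not aligning the way I expect?
  %}
  g'8\5 d' g b d'2 |
}

\score {
  \new Staff { \clef "treble_8" \verse }
}
```

Comments are ignored when the file is compiled, so they can sit right next to the problem bar until it is fixed.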

And here are a few frustrations with LilyPond, all having to do with its script-based nature:

“error: failed…”: Seemingly trivial lapses in syntax can produce disastrous results. More than once I forgot a curly brace or a quote and witnessed a dismaying string of errors pop up in the console when I tried to compile the score. A little code management and common sense go a long way here, such as indenting blocks of code and adding plenty of comments. I also found that editing LilyPond files in jEdit provided excellent syntax coloring.

Move it!: Sometimes I wanted to nudge a notational element or move staves a bit closer together. With Finale or Sibelius there is often a simple click-and-drag solution. In LilyPond I was back to poring over the documentation to find the correct \override statement and the layout object it should target. Sound tricky? It is. And for simple fixes it was frustrating (though I’m sure with time the simple \overrides would become second nature). The one consolation is that the same \override principles are used to move practically _anything_ in LilyPond, providing a degree of flexibility that Finale or Sibelius simply don’t offer.
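A minimal sketch of those two fixes, assuming LilyPond 2.24 syntax (the 2010-era releases spelled properties as `#'extra-offset` rather than `.extra-offset`). The notes, the “pinch” text marking, and all numeric values are placeholders to be adjusted by eye.

```lilypond
\version "2.24.0"

music = { g'8\5 d' g b d' b g4 }

\score {
  <<
    \new Staff \with {
      % Pull the next staff closer; distances are in staff spaces.
      \override VerticalAxisGroup.default-staff-staff-spacing =
        #'((basic-distance . 6) (minimum-distance . 5) (padding . 1))
    } {
      \clef "treble_8"
      % Nudge only the next text marking: (x . y) = right 1, up 0.5.
      \once \override TextScript.extra-offset = #'(1 . 0.5)
      g'8\5^"pinch" d' g b d' b g4
    }
    \new Staff { \clef "treble_8" \music }
  >>
}
```

Both changes follow the same `\override Object.property = value` pattern; `\once` limits the second one to the current moment in the music.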

LilyPond Life

My next step with LilyPond will be producing scores for more banjo tunes to be released in conjunction with my upcoming CD. I’m particularly interested to find out how flexible the tuning schemes for tablature can be. LilyPond’s banjo tablature already supports a number of common tunings. I wonder how it will handle the non-standard tunings in some of my pieces. Then I hope to tackle some of the more outlandish notational problems in my existing works that have previously prevented me from rendering them electronically.
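As a starting point for custom tunings, a TabStaff will accept any set of open-string pitches through `\stringTuning`. A sketch assuming LilyPond 2.24; double C (gCGCD) is used purely as an example and is not necessarily one of the tunings in these pieces. As in the built-in banjo tunings, the strings are listed from the fifth down to the first.

```lilypond
\version "2.24.0"

music = { g'8\5 c' g c d'4 c' | c'1 }

\score {
  <<
    \new Staff \with { \omit StringNumber } {
      \clef "treble_8"
      \music
    }
    \new TabStaff {
      \set TabStaff.tablatureFormat = #fret-number-tablature-format-banjo
      % Example tuning (double C), listed from string 5 to string 1:
      % g' c g c' d'. Any other five pitches could be substituted here.
      \set TabStaff.stringTunings = \stringTuning <g' c g c' d'>
      \music
    }
  >>
}
```

Fret numbers are computed from the pitches and the tuning, so the same `music` variable can be re-rendered under a different tuning by changing a single line.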

Upcoming in Australia

I’m prepping for my upcoming Australia visit and getting more and more excited about the trip. I’ll be participating in the New Interfaces for Musical Expression++ (NIME) Conference hosted by the University of Technology Sydney. The conference looks to be chock full of workshops and events including keynotes by Stelarc and Nic Collins. I’ll be performing twice as part of the conference:

- On Thursday, June 17 at 7pm I’ll perform Life on (Planet) for 2 rocks and computer. The concert will be at the Australian Broadcasting Corporation’s Eugene Goossens Hall. The featured performers are Ensemble Offspring.

- On Friday, June 18 at 5:30 pm I’m performing a set of works for banjo and electronics. The concert will be on the University of Technology Sydney campus at the Bon Marche Studio.

Then I’m excited to play with bassist Mike Majkowski on Saturday, June 19 at 7pm. We’ll improvise together as part of the Left Coast Festival 2010 at the Sedition Gallery. The gallery is apparently a working barber shop by day–sounds like a unique venue.

Throw in a Dorkbot gathering, the usual conference geekery, and some sightseeing and this should be a busy busy trip.

60×60=360

I’m pleased to be a (small) part of the most recent round of 60×60 events presented by Vox Novus. 60×60 is an hour-long concert featuring 60 one-minute pieces by 60 different composers. This year there are 6 different 60×60 concerts, totaling 360 pieces. Here’s what 60×60 director Robert Voisey says about the events:

“This is an event like no other event you have been to before. Every minute there is a new piece. 60×60 represents a diverse cross-section of music with different styles and aesthetics. I can pretty much guarantee that you will love one of the pieces, it is a pretty good bet you will not like one of the pieces; regardless of whether you like or dislike the work in 60 seconds you will hear a new piece.”

There have been about a dozen events already, including 60×60 Dance – “Order of Magnitude Mix”, which happened this weekend at McGill University. Many others are upcoming, such as the presentations associated with ICMC 2010 at the Electronic Music Foundation Creative Resource Center (today and tomorrow, June 2 and 3) and Stony Brook University (June 3-5). Here’s a list of all six 60×60 mixes and every composer represented.

River of Drone II: Seven Hours of Sound at the SoundBarn

I’m looking forward to floating in the River of Drone II: Seven Hours of Sound.

Sunday May 16th

12 noon to 7pm

at the SoundBarn in Valatie, NY.

The Albany Times Union wrote a preview here. Find more information on the Albany Sonic Arts Collective site or below.

Albany Sonic Arts Collective (ASAC) and The soundBarn are proud to present River of Drone II: Seven Hours of Sound. A very special, long-form event, River of Drone II is a free, seven-hour, improvised sound performance from 12 noon – 7 pm on Sunday May 16 at The soundBarn, Valatie, NY.

River of Drone II: Seven Hours of Sound is a collective sonic improvisation that will unfold and develop unlike any ordinary concert. From quiet, peaceful soundscapes to full-on noise, the ever-shifting rhythm, pace and mood will evolve as the ebb and flow of performers, energy and instrumentation progresses through the seven hour performance.

Set in a former orchard cooler with views of the Catskill Mountains, The soundBarn is a uniquely suited location for visitors to lounge, listen and linger for an hour, a few minutes or the entire performance. The audience is encouraged to make themselves comfortable and to bring pillows, chairs, food and drink. Unlike a traditional concert setting, performers will be located throughout the venue and listeners are encouraged to move around, watch the accompanying video projections, wander in and out and discover new relationships to sound through immersion, reflection, deep listening, meditation, and concentration.

We hope you will join us to listen, meditate, self-hypnotize, bliss out– or to simply enjoy a swim in the RIVER OF DRONE!

River of Drone II is a collaborative presentation of Albany Sonic Arts Collective and The soundBarn and will be presented at The soundBarn.

Featured musicians include Jason Cosco, Matt Ernst, Tara Fracalossi, Eric Hardiman (Rambutan, Century Plants, Burnt Hills), Ray Hare (Century Plants, Fossils From the Sun, Burnt Hills), Holland Hopson, Thomas Lail (soundBarn), Patrick Weklar (soundBarn), Matt Weston (Barn Owls), Mike Bullock, Linda Aubry Bullock, Mark Lunt, Chris Bassett, Jeremy Kelly, and many more special guests.

Videos by: Tara Fracalossi, Kyra Garrigue and more.

The soundBarn is a project of artist/musician Thomas Lail and artist Tara Fracalossi and is located on what was once Heald Orchards in Valatie, New York. The soundBarn is sited in a modern addition to the orchard’s 100-year-old Dutch-style barn. The cavernous, heavily insulated space served as the orchard’s cooler where apples and pears were over-wintered and chilled by the massive, still visible refrigeration system.

Remember the first River of Drone? Listen to some of the first River of Drone.

ASAC Presents Defragmented: Marko Timlin and thenumber46

Albany Sonic Arts Collective presents Defragmented: A concert of emergent systems featuring Marko Timlin and thenumber46 (Suzanne Thorpe + Philip White).

Saturday April 10th

Upstate Artists Guild

247 Lark St.

Albany, NY

8PM

Suggested Donation $5 (all proceeds go to touring performers)

This concert features Finnish-based composer/sound artist Marko Timlin alongside thenumber46, the collaborative effort of electro-acoustic flutist Suzanne Thorpe and electronic musician Philip White. Both Timlin and thenumber46 employ improvisation and non-linear analog systems to create music in which a delicate balance exists between the human and machine. A music at once intuitive and mechanical. Explosive and subdued. Violent and meditative.

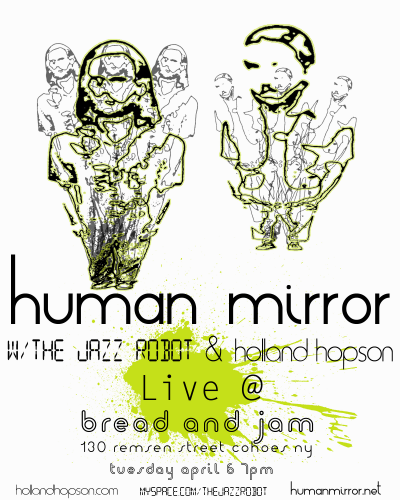

Human Mirror with The Jazz Robot and Holland Hopson

I’m excited about this show on Tuesday, April 6 at Bread and Jam in Cohoes, NY, featuring Human Mirror and The Jazz Robot. I’ll be opening the show with banjo and electronics. Come early; the music starts at 7pm.

ATU Preview of Lucre/Chen

The Albany Times Union has a preview of Sunday’s Albany Sonic Arts Collective concert featuring Lucre and Jonathan Chen.

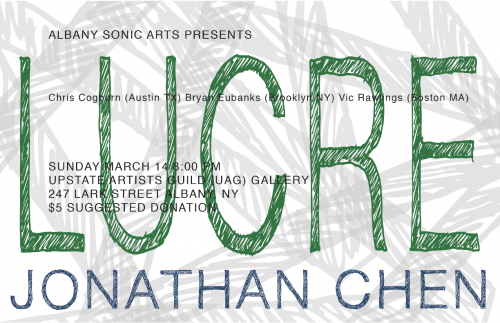

ASAC Presents Lucre and Jonathan Chen

Albany Sonic Arts Presents Lucre and Jonathan Chen

Sunday March 14 @ 8pm

Upstate Artists Guild

247 Lark St.

Albany NY

$5 suggested donation

- Lucre is the improvising trio of Chris Cogburn, Bryan Eubanks, and Vic Rawlings, who perform with exposed circuits, extended amplified cello, low-fi modular synthesis, and stripped-down percussion.

- Jonathan Chen will perform a solo set of music for electronics, viola and violin.

Chris is a good friend from my Austin days and does great work with the No Idea Festival. I’m very excited he’ll be playing in Albany. And I’m equally excited that local artist Jonathan Chen is finally getting a chance to present his work.

Max Multitrack Mojo

I’m in the middle of recording a number of my pieces for banjo and electronics for a forthcoming CD. (Stay tuned for more info!) All of the works involve live, interactive processing of the banjo sound and sometimes the voice as well. This processing is done in Max and is driven by analysis of both audio inputs and sensor inputs. All of this is geek speak to say that every time I perform a piece it sounds a little different, and sometimes markedly so. This can make recording the pieces tricky, especially since most of the music we hear is assembled like a layer cake: each part recorded separately and then mixed together after the fact (with yummy frosting…). That is not a workable option for my process.

Straight to “Tape”

My previous approach to recording followed a “live to 2 track” design. I would play the piece and capture the input sounds along with whatever sounds were generated by my Max patch. The results were certainly true to life, represented my live performances well, and usually sounded fine. Occasionally, though, I’d wish for more flexibility to tweak the sounds, particularly the vocal or banjo sounds since I don’t have the luxury of recording in the world’s greatest sounding room. So I looked into ways to expand the number of available tracks.

Multitrack Multitudes

I played around with Soundflower, ReWire and JACK in various combinations and sometimes got things working pretty well. But the setups never completely gelled for me, partly because I felt constrained by the number of available outputs on my aging MOTU interface, and partly because I needed as much CPU as possible for running my patches and couldn’t spare enough to run my DAW at the same time. So I eventually went back to recording everything in Max using a very slightly modified version of the quickrecord utility. This turned out to be a great way to break out individual tracks for further EQing during the mixing stage. One drawback was having to split the multichannel file into individual stereo or mono files. (Audacity and Pro Tools both do this very well. If only AudioFinder would support multichannel files…) But mostly I still felt constrained by the limited number of outputs on my audio interface; I often resorted to creating submixes of individual elements in Max in order to cram all the sounds into the available channels. With 10 outputs available I’d use the first 2 for monitoring while recording and 3 or 4 for live mics, leaving only 4 or 5 for sounds generated by Max.

Aggregate Device – Duh!

Just the other day I had a breakthrough realization: I could use a Soundflower aggregate device to address many more output channels than are physically available on my interface. Now I’ve got channels to spare. I’m kicking myself for not thinking of this sooner. The biggest drawback? Now I’ll be spending much more time in mixing mode. I wonder when I’ll ever get this CD finished…?